In 1999, we started working on color omnidirectional vision to give our Robocup robot players a fast sensor that reports what happens all around them. The sensor has to track in real time (at most 50 ms per frame) 7 robots moving at speeds of up to 2 m/s, a ball, a referee, and a few tens of environmental features used for self-localization.

Traditional, single-shaped, mirrors used in omnidirectional vision are

not suited since we need to include in the image at the same time

objects at a large distance (14 mt.) - effect usually

obtained by conic, parabolic and hyperbolic mirrors - and ball and robots close

to the robot body, activity possible, e.g., with spherical mirrors. Therefore, we

have designed multi-shape mirrors to obtain the desired resolution in any part

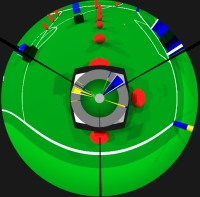

of the image. Here is the image coming from one of our first mirrors, obtained by merging

a central truncated sphere and a cone. On the left of the title, the synthetic

image of one of our last mirrors, exploiting high resolution in relatively short

distances, and lower resolution for larger and higher objects at a greater distance.


The image is subsampled by considering 3x3 pixel crosses, called receptors, arranged along circles in the image. Color values are averaged over the pixels belonging to a receptor. Image analysis is done in three steps:

- Analysis of the receptors (3x3 pixel crosses), distributed in the image so that any object at least as large as the ball, at any distance within the sensor range, is covered by at least one receptor (upper figure). For each receptor, we compute its normalized color features. This provides robustness with respect to noise and lighting conditions.

- Grouping of receptors with similar color features into blobs (shown in different colors in the upper figure).

- Blob analysis to recognize known objects; the lower figure shows a robot, the ball, distances to walls and the position of the goal.
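
The first two steps can be sketched as follows. This is a minimal illustration, not the implementation used on the robots: the cross shape (center pixel plus its four neighbors), the use of chromaticity (each channel divided by the channel sum) as the normalized color feature, the region-growing rule, and all names and parameters (`receptor_centers`, `spacing`, `max_gap`, `tolerance`) are assumptions made for the example. The third step, blob analysis, depends on object models that are not detailed here and is left out.

```python
import math

# Assumed receptor shape: the cross inside a 3x3 window
# (center pixel plus its four direct neighbors).
CROSS = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def receptor_centers(cx, cy, radii, spacing):
    """Place receptor centers on circles around (cx, cy), about `spacing` pixels apart."""
    centers = []
    for radius in radii:
        count = max(1, int(2 * math.pi * radius / spacing))
        for k in range(count):
            angle = 2 * math.pi * k / count
            centers.append((round(cx + radius * math.cos(angle)),
                            round(cy + radius * math.sin(angle))))
    return centers


def receptor_color(image, x, y):
    """Average RGB over the cross pixels inside the image; None if there are none."""
    height, width = len(image), len(image[0])
    pixels = [image[y + dy][x + dx] for dx, dy in CROSS
              if 0 <= x + dx < width and 0 <= y + dy < height]
    if not pixels:
        return None
    return tuple(sum(p[c] for p in pixels) / len(pixels) for c in range(3))


def normalize(rgb):
    """Assumed normalized color feature: each channel divided by the channel sum."""
    total = sum(rgb)
    if total == 0:
        return (1 / 3, 1 / 3, 1 / 3)
    return tuple(c / total for c in rgb)


def group_into_blobs(receptors, max_gap, tolerance):
    """Region growing: link receptors that are close both in the image and in color.

    `receptors` is a list of ((x, y), feature) pairs; the result is a list of
    blobs, each a sorted list of receptor indices.
    """
    unvisited = set(range(len(receptors)))
    blobs = []
    while unvisited:
        seed = unvisited.pop()
        blob, frontier = [seed], [seed]
        while frontier:
            position, feature = receptors[frontier.pop()]
            linked = [j for j in unvisited
                      if math.dist(position, receptors[j][0]) <= max_gap
                      and math.dist(feature, receptors[j][1]) <= tolerance]
            for j in linked:
                unvisited.remove(j)
                blob.append(j)
                frontier.append(j)
        blobs.append(sorted(blob))
    return blobs


def analyze(image, cx, cy, radii, spacing, max_gap, tolerance):
    """Steps 1 and 2: sample the receptors, then group them into blobs."""
    receptors = []
    for x, y in receptor_centers(cx, cy, radii, spacing):
        color = receptor_color(image, x, y)
        if color is not None:
            receptors.append(((x, y), normalize(color)))
    return receptors, group_into_blobs(receptors, max_gap, tolerance)
```

Here `image` is a list of rows of `(r, g, b)` tuples. Because only the receptors are visited, the cost per frame grows with the number of receptors rather than with the number of pixels, which is the point of the subsampling.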


The whole process is fast enough to acquire and analyze up to 20 frames per second, even on the AMD K6 300 MHz that we had on the former robots, under Linux. You may get an idea of the reactivity of our first implementation of this system by looking at the movie of our first robot playing with A. Bonarini (April 1999). Other movies of the new robots (three times faster) are available from our movie page.


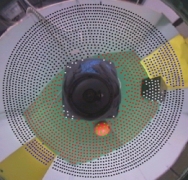

Designing mirrors to match user needs is a research line now pursued by many people around the world. We have pushed further in this direction: D. Sorrenti and F. Marchese have defined a mirror that does not distort the image of objects at ground level. It is thus possible to exploit the maximum precision available from the camera to evaluate the distance and direction of objects on the floor. As you can see from the image on the left, this too is a multi-part mirror, where the closer objects are projected in the outer part, exploiting higher resolution.
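
To illustrate why an undistorted view of the floor simplifies measurement, here is a minimal sketch of the pixel-to-floor conversion. It assumes that, within one part of the mirror, floor points are imaged at a constant scale, so that image radius is proportional to floor distance. The function name `floor_polar` and the calibration values `cu`, `cv` (image center) and `meters_per_pixel` are hypothetical; a multi-part mirror would need its own mapping for each part.

```python
import math


def floor_polar(u, v, cu, cv, meters_per_pixel):
    """Convert pixel (u, v) into (distance, direction) on the floor.

    Assumes a constant floor scale `meters_per_pixel` around the image
    center (cu, cv); both are hypothetical calibration values.
    The direction is in radians, measured in image axes (v grows downwards).
    """
    du, dv = u - cu, v - cv
    distance = math.hypot(du, dv) * meters_per_pixel
    direction = math.atan2(dv, du)
    return distance, direction
```

With a mirror that distorts the floor, the single multiplication would have to be replaced by a calibrated radial lookup, and the distance resolution would vary across the image.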


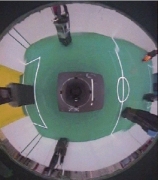

We have adopted these techniques, together with those developed for the standard COPIS, in an industrial project funded by ENEA and aimed at the development of a robot operating in an industrial environment. On the left, a picture taken in such an environment, where pipes, a heat exchanger and a door open to the sunny outside are visible.

We are also adopting the same techniques in MADSys, another project, co-funded by the Italian Ministry of Education, University and Research, aimed at the development of an environment to support the implementation of embodied multi-agent systems.


Principal investigators

A. Bonarini, F. Marchese, M. Restelli, D. Sorrenti

Research contributors

P. Aliverti, S. Brusamolino, R. Cipriani, F. Cuzzotti, E. Grillo, M. Lucioni

Related Papers

Bonarini, A. (2000). The Body, the Mind or the Eye, first? In M. Veloso, E. Pagello, A. Asada (Eds.), Robocup99 - Robot Soccer World Cup III, Springer Verlag, Berlin, D, 40-45.


Bonarini, A., Aliverti, P., Lucioni, M. (2000). An omnidirectional sensor for fast tracking for mobile robots. IEEE Transactions on Instrumentation and Measurement, 49(3), 509-512.


Lima, P., Bonarini, A., Machado, C., Marchese, F. M., Marques, C., Ribeiro, F., Sorrenti, D. G. (2001). Omnidirectional catadioptric vision for soccer robots. International Journal of Robotics and Autonomous Systems, 36(2-3), 87-102.
